Ever wondered how programmers know how to do everything on a computer? Well, here’s an example.

I have a problem: my computer doesn’t automatically pause the music I’m listening to when I leave my desk, so let’s work out how to fix that. It seems like a simple thing to do; after all, when I’m sitting at my desk all I need to do is move my mouse and press the pause button.

So how are we going to approach this? Let’s first break down the task. Most computer systems do two things: they wait for an event, and when it arrives they trigger an action. In our case, then, the two tasks are:

- Detect when I’m away from my computer

- Trigger the music player to pause.
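The two tasks above fit the usual "wait for an event, then trigger an action" shape. Here is a minimal sketch of that loop in Python (the language we end up choosing later), assuming two placeholder callables: `is_away()` for the event and `pause()` for the action. Both names are hypothetical stand-ins for the pieces we still have to find.

```python
import time
from typing import Callable, Optional


def watch(
    is_away: Callable[[], bool],
    pause: Callable[[], None],
    poll_seconds: float = 5.0,
    max_checks: Optional[int] = None,
) -> int:
    """Poll for the 'away' event and trigger the 'pause' action.

    is_away and pause are hypothetical placeholders. The action fires only
    on the change from 'at my desk' to 'away', so the music is paused once
    when I leave rather than on every check. Returns how often it fired.
    """
    was_away = False
    fired = 0
    checks = 0
    while max_checks is None or checks < max_checks:
        away = is_away()            # check for the event
        if away and not was_away:   # I have only just left
            pause()                 # trigger the action
            fired += 1
        was_away = away
        checks += 1
        if max_checks is None or checks < max_checks:
            time.sleep(poll_seconds)
    return fired
```

Polling every few seconds is the simplest way to "wait for an event" when the operating system doesn't hand us a notification; `max_checks` exists only so the loop can be tried out without running forever.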

First step

If we’re lucky, someone has already done this work for us, so our first step is to find out whether it exists. Our friend here is the internet; we’ll call him “Google”.

Me: "Does my music player stop playing when I leave my desk?"

Google: "Hi! We've found 2.9 million results! (we know you'll be interested in hearing that your search took 0.64 seconds to complete - you're welcome!) So.... Maybe this would help.... Maybe this would help....Maybe this would help... Maybe this would help... Maybe this would help... Maybe this would help... Maybe this would help... Maybe this would help... Maybe this would help... Maybe this would help... Next page."

Me: "No helpful results. Damn it."

Second step

No-one else has figured out how to do this, so our next step is to figure out whether it’s even possible. We first want to check whether we can pause our music player without the mouse. If you thought we should first check how to detect when I’m away from my computer, you’d be wrong: there’s no point doing that if we can’t trigger a pause without using the mouse. So now we draw on one of three things:

- Our experience: we’ve done it before, so we know how to do it again.

- The documentation: the people who wrote the software know how to do it and they’ll tell us.

- The internet: our friend will know what to do because someone else told him, and now he can tell us.

If you’re in number one, great! Get coding! Unfortunately, the reality is that there are too many pieces of software, written in too many different ways, for too many different systems, so chances are you won’t have done it before. So on to number two, but where’s the documentation? Many, many, many years ago, in preInternetian times, documentation may have come in a box in what was called a book or, if you were lucky, on cartridge/floppy/CD/DVD. Nowadays almost all documentation is online, so go directly to number three.

Me: "How do I pause my music player without my mouse?"

Google: "Hi! We've found 4.1 million results and it just took 0.69 seconds (I know, we're awesome!). And you lucky thing, we've picked this fantastic advert just for you: 'No hands? try...'"

Me: "Ah, look, the documentation for my music player."

So you’ve found the documentation – it will be one of several things:

- Too short

- Too technical

- Too long

- Just right

Back to Google to search properly:

Me: "How do I pause my music player without my hands? EXAMPLE"

Google: "We think you might like... Maybe this... Maybe this... Maybe this... Maybe this... Maybe this... "

Me: "Aha!"

So now we’re getting somewhere: we just need to call our music player app with some extra bits, “Music Player PAUSE”. Great. Hopefully it’ll work in our version of the software… Now for our other task: how to detect when I’m not at my desk.
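“Music Player PAUSE” stands in for whatever your player’s documentation tells you to run. Here is a minimal Python sketch of calling it, assuming a Linux desktop with `playerctl` installed (a command-line controller for MPRIS-compatible players, whose `pause` command does what it says); if your player has its own command, swap it in. The `runner` parameter is a hypothetical hook so the call can be checked without actually pausing anything.

```python
import subprocess
from typing import Callable, Sequence

# Assumption: playerctl is installed. Replace with your own player's command.
PAUSE_COMMAND: Sequence[str] = ("playerctl", "pause")


def pause_music(
    command: Sequence[str] = PAUSE_COMMAND,
    runner: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Run the player's pause command; return True if it exited cleanly."""
    try:
        result = runner(list(command), capture_output=True, text=True)
    except FileNotFoundError:
        # The command isn't installed, or isn't on PATH.
        return False
    # A non-zero exit code usually means the player didn't accept the request.
    return result.returncode == 0
```

Returning `True`/`False` rather than assuming success is a nod to “hopefully it’ll work in our version of the software”: the caller can at least notice when it didn’t.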

Third step

Google. Friend. Let’s talk.

Me: "Dear Google, how do I know when I am not at my desk?"

Google: "Your location is here. Map. Have you seen our Google Doodle?"

Me: "Oh cool, a Google Doodle, let's see what it is. Oh, it's Larry Page's birthday, it's a Google Doodle made out of other Google Doodles... so who's Larry Page..."

Google: "Larry Page's profile. You're telling me you don't know who Larry Page is? Sergey Brin's profile. Next you'll be telling me you don't know who Sergey Brin is."

Me: "Who is Sergey Brin? Wait - wasn't I doing something?"

Google: "We always know what you are doing. Safe search on. You were looking for these ads: this one, this one, this one, this one, this one, this one, this one, this one....."

Me: "Concentrate"

Google: "A concentrate is a form of substance which has had the majority of its base component removed..."

Me: "Sigh. How does my computer know when I'm not at my desk?"

Google: "Maybe these pages will help..... (By the way, did we mention we have 2.5 gazillion results which took just 3 yoctoseconds to complete?)"

Distractions are everywhere: constant vigilance! (And a nice pair of headphones.) Sometimes it’s difficult to even know what you need to “look for”. The best way to solve this is to guess, see what results you get, try some other words, and iterate until you find what you’re looking for. In this particular case I should have been asking “How does my operating system know when I’m idle?”

Me: "How does my operating system know when I'm idle EXAMPLE"

Google: "1.6 mil-blah, blah, try this page."

Me: "Ah, we just have to use that program, so let's read the documentation for that."

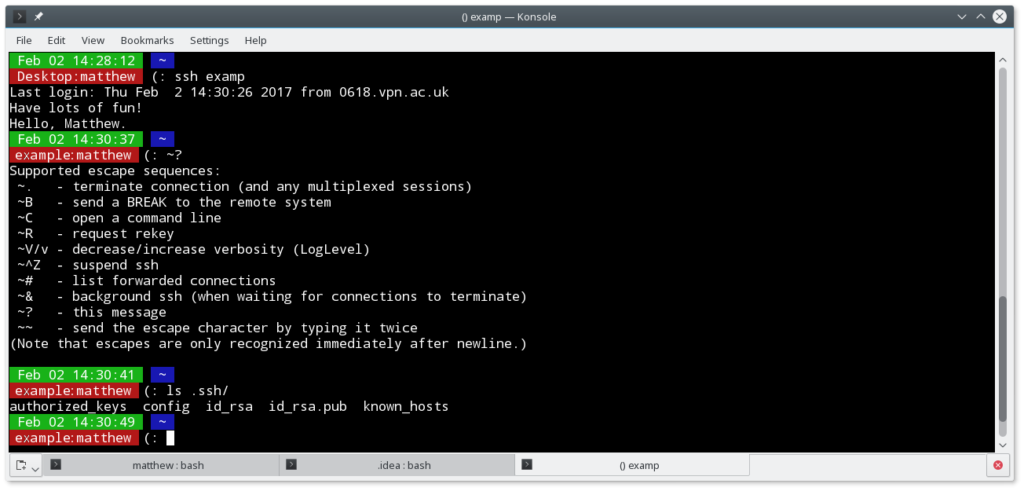

Now that we know which app we need to use, we just need to connect it to our previous one. This is where we actually start writing code…
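The idle-detection program from the documentation isn’t named in the text, so as an assumption this sketch uses `xprintidle`, a small X11 utility that prints how long the user has been idle, in milliseconds. It is written in Python, jumping ahead slightly to the language we pick below, and wraps that output as an `is_away()` check: the “event” half of our two tasks. The five-minute threshold and the `runner` hook are illustrative choices, not anything the documentation prescribes.

```python
import subprocess
from typing import Callable

# Assumption: xprintidle (X11) is installed and prints idle time in ms.
IDLE_COMMAND = ["xprintidle"]

Runner = Callable[..., subprocess.CompletedProcess]


def idle_seconds(runner: Runner = subprocess.run) -> float:
    """Return how long the keyboard and mouse have been idle, in seconds."""
    result = runner(IDLE_COMMAND, capture_output=True, text=True, check=True)
    return int(result.stdout.strip()) / 1000.0


def is_away(threshold_seconds: float = 300.0, runner: Runner = subprocess.run) -> bool:
    """Treat 'no input for threshold_seconds' as 'not at my desk'."""
    return idle_seconds(runner) >= threshold_seconds
```

Idle isn’t quite the same as away (I might just be reading something on screen), which is why the threshold is deliberately generous.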

Fourth step

So let’s try our luck again. Hopefully we can simplify our code by finding someone else’s code that wraps the apps we need. Yep, it’s another Google search. But first we have to decide which programming language to use. There are a lot of choices, but it comes down to two questions:

- What did I use before?

- Is there something cooler?

If you choose the second one you’ll be working on this “simple” solution for the next month. This is supposed to be a quick thing, so let’s use what we’re used to (we might try the second one anyway, depending on how bored, courageous or stupid we’re feeling at the time). We’ll choose Python: we’ve used it before and there are lots of “wrapped apps” out there in the wild. So we add the word “Python” to our “music player” and “idle detection” searches.

Me: "What?"

Summary

- There are loads of things programmers don’t know how to do.

- We are learning new things every day.

- We search the internet a lot.

- It’s easy to get distracted.

- Programming is way more complicated than it should be.

- But it can be quite rewarding.

- It can also be very time-consuming.

Notes

I’ve tried to keep the jargon to a minimum. By “app” I mean any program, application, library or piece of code; most projects use lots of combinations of these.